Can we imagine AI-based public governance systems free of gender, racial, or class bias? What role should citizens and the third sector play? We recap the ideas shared by Karlos Castilla, Paula Guerra Cáceres and Paula Boet in the second session, «AI, public policies and discrimination», of the cycle «Artificial Intelligence, Rights and Democracy».

To date, 27,565 people have signed the open letter «Pause Giant AI Experiments: An Open Letter», which calls for a moratorium of at least six months on the development of artificial intelligence. The proposal is motivated by the accelerated pace of development of AI systems such as ChatGPT. AI poses significant risks, threats and vulnerabilities to our rights and freedoms, and to democracy itself, but it also holds real potential. We face an uncertain scenario in which public-sector regulation is more important than ever.

Artificial intelligence, and the accelerated progress it brings, is already part of society. But what happens when such an innovative technology, which should help all of us equally, carries biases that lead to discrimination or racism? In 2021, a study by IE University revealed that 64.1% of Europeans believe that technology is reinforcing democracy.

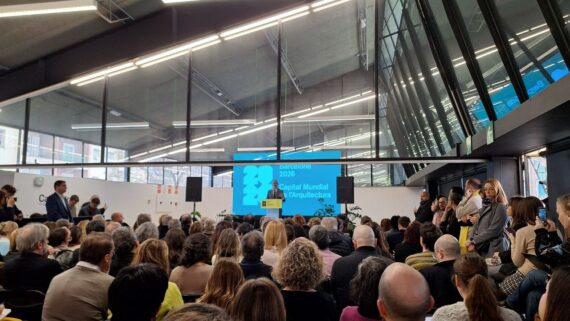

Along these lines, in the second session of the lecture cycle «AI, Rights and Democracy» at the Canòdrom – Democratic Innovation Center of Barcelona, experts in ethics, politics and technology Paula Guerra Cáceres, Karlos Castilla and Paula Boet discussed how to build artificial intelligence for public governance that is auditable and serves the common good. The lack of training about these algorithms, and of transparency about how they work, within civil society raises a debate: how do people relate to AI, what rights do they gain, and what do they put at risk?

Political Interests and Discrimination Inherent in Algorithms

The report «Four angles of analysis of equality and non-discrimination in artificial intelligence» (2022), by the Institute of Human Rights of Catalonia and the Open Society Foundation, states that China, the United States and Japan account for 78% of all artificial intelligence patent applications worldwide. Africa and Latin America are the regions with the lowest levels.

The document analyzes the positive and negative effects of AI on equality and non-discrimination from four fundamental angles, answering the questions of where, by whom, for what purpose and how this technology is developed. Along these lines, Karlos Castilla, researcher at the Institute of Human Rights of Catalonia, described a talk about AI whose images already conveyed the message: attended mostly by white men and only a few women, it showed that gender stereotypes and discrimination are not encoded only in technological systems. According to Castilla, spaces like these are themselves sources of discrimination.

Algorithms that decide whether a complaint is false or predict the likelihood of reoffending

Institutions and public administrations are not the only ones discussing the challenges of digital governance and the adoption of artificial intelligence systems in the public sector. Civil society organizations are doing so too, seeking to raise public awareness of how these algorithms are designed, reflect on it collectively, and propose solutions from the perspective of rights advocacy in the digital age.

In this regard, Paula Guerra Cáceres, of the organization Algorace, which analyzes and investigates structural racism and its consequences in the digital sphere, presented the report «An introduction to AI and algorithmic discrimination for social movements» (2022). According to Guerra, the report "arose from the idea of bringing the subject of AI closer to people, focusing on the impact it has on racialized people and groups". New AI-related digital transformation processes, as well as the public policies that apply this technology, are being regulated without listening to the voice of organized civil society.

One such case is VeriPol, an AI-based tool that uses natural language processing to analyze police complaints and predict whether a complaint is false, based on the details given by the complainant and the morphosyntax of their statement. The algorithm has been strongly criticized because it was built on a very limited dataset, under the supervision of a single police officer, which does not guarantee fair application or protection of complainants' rights. Another example comes from Spain's prison system, where an algorithm predicts the likelihood of reoffending using variables such as neighborhood, demographic group and even the person's place of origin, reinforcing a discriminatory and punitive justice system.

Mechanisms to Regulate AI

Similar cases can be found in other European countries. In the Netherlands, the SyRI system was put into operation to detect possible fraud in the welfare, tax and social security systems by evaluating data on families receiving state aid. The algorithm labeled working-class and middle-class families as «possible fraudster families», fostering discrimination and segregation and invading their privacy. A similar case exists in Spain with BOSCO, software commissioned by the Government that electricity companies use to decide who qualifies for the so-called social electricity bonus.

To tackle at the root the discrimination, racism and sexism associated with the use of AI in our lives, Barcelona City Council has created the protocol «Definition of working methodologies and protocols for the implementation of algorithmic systems» (2023). According to Paula Boet, of the Commissioner for Digital Innovation - BIT Habitat Foundation of Barcelona City Council, «it is a pioneering document with regard to the regulation and governance of algorithmic systems at the local level».

The protocol is based on the proposed European regulation on artificial intelligence and defines, step by step, the mechanisms for guaranteeing and safeguarding rights that must be introduced at each stage when a local authority implements an artificial intelligence system.

Artificial Intelligence, Communication and Journalism

AI has already been widely introduced into communication, in many different ways. In private life, we know it from voice assistants such as Siri on smartphones or Alexa in homes. In the field of communication, tools such as ChatGPT, or software that automatically generates digital images, have opened a window of opportunity, but also a fairly dark set of challenges. Do these new tools make the work of journalists and communicators easier, or do they replace them? Where is the line?

On 18 May, the cycle «AI, Rights and Democracy» continues with the session «AI, Communication and Journalism», featuring Patrícia Ventura (researcher in ethics, artificial intelligence and communication), Judith Membrives (digitalization specialist at Lafede.cat), Carles Planas Bou (journalist specializing in technology at El Periódico) and Enric Borràs (deputy director of Diari Ara). The four experts will discuss what the use and standardization of artificial intelligence mean for the information and communication sector.